WordPress is a CMS widely used nowadays to build sites. Even though it wasn’t created for this purpose (it started out as a blogging tool), its simplicity and popularity mean that loads of people use it to build their websites.

When the end goal is no longer a blog but a plain website, and you’re not using any ‘search’ or ‘comment’ features (which still require PHP functions), it could be worth considering a completely “static’ed” site published in a Cloud Files Public Container. You could take advantage of the built-in CDN capability and forget about scaling on demand and all the limitations that WordPress has in the Cloud.

Having a static site as opposed to a PHP site means you can bypass security patch installations and avoid the possible risk of compromised servers.

And last but not least: cost reduction!

When you serve a static site from Cloud Files you pay only for the space utilized and the bandwidth – no more charges for Cloud Server(s) and better performance! So… why not give it a try?! 🙂

Side note: this concept can be applied to any CMS and any Cloud Platform. In this post I will use WordPress and Rackspace Public Cloud as examples.

Here is a list of use cases:

- Existing website built on WordPress, without search fields, comments or forms (dynamic content), which is still being updated on a regular basis with extra pages.

- Brand new project based on WordPress where the end goal is to use WordPress as a Content Management System (CMS) to build a site, without any comment or search fields.

- Old website built on an old version of WordPress that cannot be updated because some plugins won’t work on newer versions of this CMS, with no dynamic functionality required.

- Legacy site built on WordPress that is no longer being developed/updated but is still required to stay online, with no dynamic functionalities required.

To make a site built with a CMS completely static, any dynamic functionality needs to be disabled (e.g. comments, forms, search fields…).

It’s possible to manually re-integrate some of these by relying on trusted external sources (e.g. Facebook comments) and adding some JavaScript. But this requires editing the generated static pages, which is outside the scope of this article. I still wanted to mention it, as it could be a limiting factor in the decision to make the site static.

What do you need?

- a Cloud Load Balancer: this is just to keep a static IP

- a small Cloud Server LAMP stack (1/2GB max) where you will host your site

- a Cloud Files Public container to host the static version of the site

- access to your DNS

- some basic Linux knowledge to install some packages and run a few commands via command line

How to set this up?

Imagine you have www.mywpsite.com built on WordPress.

It’s a site that you keep updating generally once or twice a week.

Comments are all disabled and you don’t have any search fields.

You found this post and you’re interested in trying to save some money, improve performance and forget about patching and updating your CMS.

So, how can you achieve this?

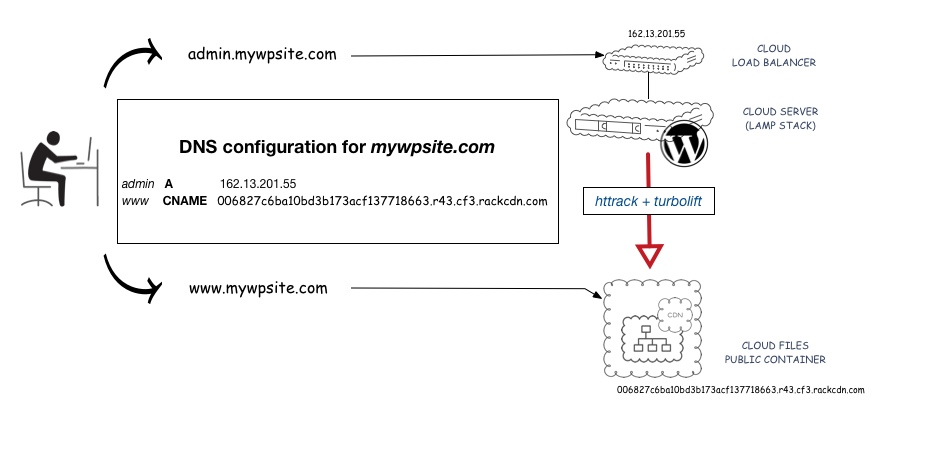

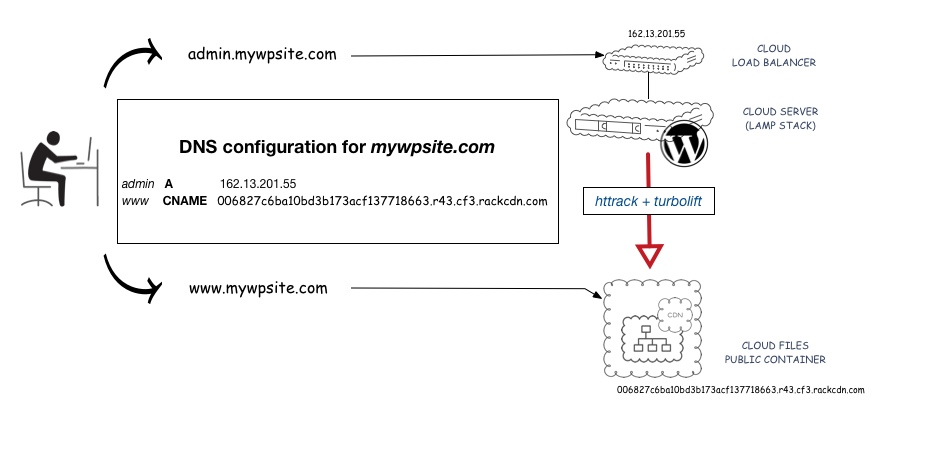

You will have to build a development infrastructure made up of a Cloud Load Balancer and a single Linux LAMP Cloud Server plus a Cloud Files Public Container for your production static site.

Your main domain www.mywpsite.com will need to be a CNAME of the Cloud Files Container and no longer point to your original site/server. You also need to create a new subdomain (e.g. admin.mywpsite.com) and point it to the development infrastructure: specifically, to the Cloud Load Balancer’s IP. You will then use admin.mywpsite.com/wp-admin/ instead of www.mywpsite.com/wp-admin/ to manage your site.

The only reason to use a Load Balancer in a single-server solution is to keep the public IP, meaning you will no longer need to touch the DNS.

The goal is to image the server after you’ve completed making the changes and then delete it. In this way you won’t get charged for a resource that doesn’t serve any traffic and is there just for development. In this example, I’ve mentioned that changes are made a couple of times a week, so it’s cheaper and easier to always keep a Load Balancer with admin.mywpsite.com pointing to it, instead of a Cloud Server where you would be changing the DNS every time.

If you’re planning to keep the dev server up continuously, you could avoid using a Load Balancer entirely. However, Cloud Servers might fail (individual Cloud Servers are not designed to be resilient), so you may need to spin up a new server and re-point the DNS. In this case I do recommend keeping the DNS TTL as low as possible (e.g. 300 seconds).
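To see which TTL resolvers actually hand out for the development subdomain, a quick check with dig can help. This is only a minimal sketch: it assumes dig is installed (on CentOS it usually comes with bind-utils), and the function names are made up for illustration.

```shell
#!/bin/bash
# Sketch: report the TTL a resolver returns for a hostname.
# Assumes `dig` is installed; function names are illustrative only.

# Read `dig +noall +answer` output on stdin and print the TTL
# (second column) of the first answer record.
answer_ttl() {
    awk 'NF >= 5 { print $2; exit }'
}

# Query the A record for the given hostname and print its TTL in seconds.
record_ttl() {
    dig +noall +answer "$1" A | answer_ttl
}
```

Running `record_ttl admin.mywpsite.com` against a caching resolver should print a value at or below the TTL you configured, since cached records count down.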

Please note that the Site URL in your WordPress setup needs to be changed to admin.mywpsite.com. If you’re starting a new project, remember that the Site URL has to match the development subdomain you have chosen (admin.mywpsite.com in our example).

Once you are happy with the site and everything works under admin.mywpsite.com, you will just need to run a command to make a static version of it, and another command to push the content onto the Cloud Files Container.

Once complete and verified, you can change the DNS for www.mywpsite.com to point to the Container, then image your dev server and delete it once the image has completed. You will create a new server from this image the next time you want to make a new change.

Here is a diagram that should help you to visualise the setup:

diagram

All clear?

So let’s do it!

How do you put this in practice?

Step-by-step guide (no-downtime migration)

- Create a Cloud Server LAMP stack.

- Create a Cloud Load Balancer. Take note of its new IP.

- Add the server under the Load Balancer.

- Create a Cloud Files Public Container. Take note of its HTTP Public Link.

- Create the subdomain admin.mywpsite.com in your DNS and point it to the IP of your Cloud Load Balancer. Keep your www.mywpsite.com domain pointing to your live site to avoid downtime.

- Migrate the WordPress files and database onto the new server, and make sure NO changes are made to the live site during the migration, to avoid data inconsistency.

- Change the Site URL to admin.mywpsite.com. Please note that this can be a bit tricky. Make sure all the references are properly updated. If it’s a new project, just install WordPress on it and set the Site URL directly to admin.mywpsite.com.

- Verify that you can access your site using admin.mywpsite.com and that the wp-admin panel also works correctly. Troubleshoot until this step is fully verified.
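The migration and Site URL steps above could be sketched roughly as follows. Everything here is an assumption on my side rather than part of the original procedure: the old server's address, the paths and the database name are placeholders, MySQL credentials are expected in ~/.my.cnf on both servers, and WP-CLI (the `wp` command) is assumed to be installed on the new server. The `run` helper only prints the commands unless you set DRY_RUN=0.

```shell
#!/bin/bash
# Sketch of the migration and Site URL steps. All hosts, paths and database
# names are hypothetical placeholders; WP-CLI (`wp`) is assumed to be
# installed, and MySQL credentials are assumed to live in ~/.my.cnf.
OLD_HOST="root@old-server.example.com"
WP_PATH="/var/www/html"
DB_NAME="wordpress"
OLD_URL="http://www.mywpsite.com"
NEW_URL="http://admin.mywpsite.com"

# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

migrate_site() {
    # Copy the WordPress files from the old server.
    run rsync -az "$OLD_HOST:$WP_PATH/" "$WP_PATH/"
    # Dump the database remotely and load it into an existing local database.
    run sh -c "ssh '$OLD_HOST' mysqldump '$DB_NAME' | mysql '$DB_NAME'"
    # Point WordPress at the development subdomain
    # (WP-CLI needs --allow-root when run as root).
    run wp --path="$WP_PATH" option update home "$NEW_URL"
    run wp --path="$WP_PATH" option update siteurl "$NEW_URL"
    # Rewrite stored references; the guid column is conventionally skipped.
    run wp --path="$WP_PATH" search-replace "$OLD_URL" "$NEW_URL" --skip-columns=guid
}
```

Calling `migrate_site` prints the five commands for review; `DRY_RUN=0 migrate_site` would actually execute them, so only do that once the placeholders match your setup.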

NOTE: At this stage we have a new development site set up and the original live site still serving traffic. The next steps will complete the migration, with live traffic served directly from the Cloud Files Container.

- Install httrack and turbolift on your server.

On a CentOS server with the EPEL repository enabled, you should be able to run the following (package names can vary between releases):

yum -y install python-pip httrack

pip install turbolift
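Before moving on, it can be handy to confirm that both tools ended up on the PATH. A small sketch, where `missing_tools` is just an illustrative helper name:

```shell
#!/bin/bash
# Sketch: print the name of every given tool that is not on the PATH.
# missing_tools is an illustrative helper, not part of httrack or turbolift.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" > /dev/null 2>&1 || echo "$tool"
    done
}
```

`missing_tools httrack turbolift` prints nothing when both are installed, and otherwise lists whatever still needs installing.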

- [OPTIONAL, but recommended to avoid useless bandwidth charges]

Add an entry to /etc/hosts so that admin.mywpsite.com resolves locally, which forces httrack to pull the content without going over the public network:

echo "127.0.0.1 admin.mywpsite.com" >> /etc/hosts
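Since the dev server gets re-created from an image over and over, you may prefer a version that doesn't append a duplicate line on every run. This is a sketch under my own assumptions: `add_hosts_entry` is a made-up helper, and its optional third argument exists mainly so you can try it on a copy of the hosts file first.

```shell
#!/bin/bash
# Sketch: add a hosts entry only if the hostname is not already mapped.
# add_hosts_entry is an illustrative helper; the third argument defaults
# to /etc/hosts and lets the function be tried on a copy.
add_hosts_entry() {
    local ip="$1" host="$2" file="${3:-/etc/hosts}"
    # Look for the hostname as a whole field on any non-comment line.
    if awk -v h="$host" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
        echo "already present: $host"
    else
        echo "$ip $host" >> "$file"
        echo "added: $ip $host"
    fi
}
```

For example, `add_hosts_entry 127.0.0.1 admin.mywpsite.com` (run as root) adds the line once and reports "already present" on later runs.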

- Combine httrack and turbolift to generate a static version of admin.mywpsite.com locally and push it to the Cloud Files Container.

To achieve that, you can use a simple BASH script like the one below:

Script project: https://bitbucket.org/thtieig/wp2static/

#!/bin/bash

#########################################################

# Replace the quoted values with the right information: #

# ------------------------------------------------------#

USERNAME="yourusername"

APIKEY="your_api_key"

REGION="datacenter_region"

CONTAINERNAME="mycontainer"

DEVSITE="admin.mywpsite.com"

#########################################################

DEST=/tmp/$DEVSITE/

USER=$(echo "$USERNAME" | tr '[:upper:]' '[:lower:]')

DC=$(echo "$REGION" | tr '[:upper:]' '[:lower:]')

# Clean up / create destination directory

rm -rf "$DEST" && mkdir -p "$DEST"

# Create static copy of the site

httrack "$DEVSITE" -w -O "$DEST" -q -%i0 -I0 -K0 -X "-*/wp-admin/*" "-*/wp-login*"

# Upload the static copy into the container via local network SNET (-I)

turbolift -u "$USER" -a "$APIKEY" --os-rax-auth "$DC" -I upload -s "${DEST}${DEVSITE}" -c "$CONTAINERNAME"
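One extra check that may be worth running between the httrack and turbolift steps is to look for pages that still link to the development hostname with an absolute URL, since those links would break once the dev server is deleted. The sketch below is my own addition, not part of the wp2static script: `find_dev_links` is an illustrative name, and it deliberately searches for "://" followed by the hostname so that a bare mention of the host (for example in a comment added by the mirroring tool) is not reported.

```shell
#!/bin/bash
# Sketch: list mirrored files that still contain an absolute URL pointing
# at the development hostname. find_dev_links is an illustrative helper.
find_dev_links() {
    local mirror_dir="$1" dev_host="$2"
    # -r recurse, -l print file names only, -F match a fixed string.
    # Exits non-zero when no file matches.
    grep -rlF -- "://$dev_host" "$mirror_dir"
}
```

With the variables from the script, that would be `find_dev_links "${DEST}${DEVSITE}" "$DEVSITE"`; no output means no absolute links to the dev site were found.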

- Now you can test if the upload went well.

Just open the HTTP link of your Cloud Files Container in your browser and make sure the site is displayed correctly.

- If all works as expected, you can now make your www.mywpsite.com domain a CNAME of that HTTP link. Once done, your live traffic will be served by your Cloud Files Container and no longer by your old live site!

- And yes, you can now image your Cloud Server and delete it once completed. Next time you need to edit/add some content, just spin up another server from its latest image and add it under the Cloud Load Balancer.
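Before deleting anything, the last few steps can be double-checked from the command line. This is a minimal sketch assuming curl and dig are available; the function names are illustrative, and the container hostname you pass in is whatever your own HTTP Public Link shows.

```shell
#!/bin/bash
# Sketch: post-cutover checks. Assumes `curl` and `dig` are installed;
# function names are illustrative only.

# Print the HTTP status code returned for a URL.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Succeed when the hostname's CNAME target equals the expected target.
# A trailing dot on either side is ignored.
cname_is() {
    local host="$1" expected="${2%.}" actual
    actual="$(dig +short "$host" CNAME)"
    [ "${actual%.}" = "$expected" ]
}
```

For instance, `http_status` on the container's HTTP link should print 200 if the upload is being served, and `cname_is www.mywpsite.com` followed by the host part of that link should succeed once the DNS change has propagated.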

Happy “static’ing“! 🙂