I have been working in IT for a while now, and many times I have met customers who accidentally lost the root password or the SSH key. Usually their server is up and running nicely, but they can’t connect to it anymore.

In the cloud era, 99% of the time it is going to be a virtual server, which makes things much easier. Of course, the same approach works with physical servers, but the “move disk” step that I’m going to explain in a bit requires you to… literally… plug and unplug the disk 🙂

First, you can connect from your PC to a remote Linux server using:

- username and password

- ssh key

In both cases, the Linux server has “something stored” on it: one or more files (everything in Linux is a file) that we can edit or replace if we have access to the disk.

Getting there, aren’t we? 🙂

Cool. So, if we lose the password or the SSH key, and we still desperately need to access that server because we didn’t think about backing it up (veeery bad – slap on your hands now!) or our laptop with the SSH key broke (didn’t you back that up either?? Really? Another slap!), one option is the following:

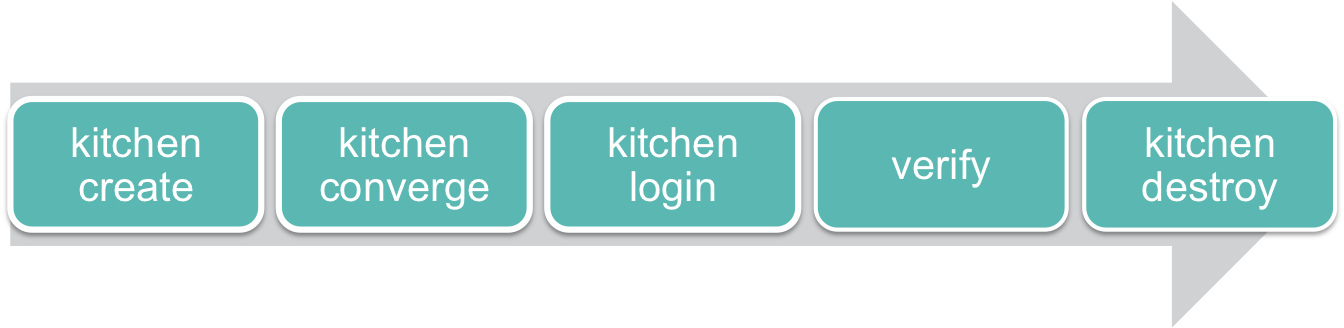

- Spin up a new server (we’re going to call it Saviour), and verify we can connect to it

- Detach the OS disk from the inaccessible server (called Desperate) and attach it to Saviour

- Modify/replace files on Desperate’s disk from Saviour

- Move Desperate’s disk back to the Desperate server

- Test if you can now connect to Desperate

- Delete Saviour

As I said before, this can also work with physical servers. The only bit that changes is that you literally need to remove the disk, install it in the other server, and then put it back. If you don’t have another server, you can use your laptop and a USB adapter, but the laptop still needs to run Linux. Anyway, I’m sure you can figure out how to adapt these instructions.

Now, let’s start, but DO IT AT YOUR OWN RISK.

I assume we have Saviour up and running, and that we can connect to it via SSH. If not, stop reading and do something, come on! 🙂

Works? Niiice!

Next, we move Desperate’s disk to Saviour and we make sure it’s visible.

You can use fdisk -l to see if there is a new disk (it’s generally the last entry).

Here is an example:

    root@saviour:~# fdisk -l
    Disk /dev/vda: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: gpt
    Disk identifier: 6CBB44F1-D559-9B42-A076-7C0EA2B76310

    Device      Start      End  Sectors  Size Type
    /dev/vda1  262144 20971486 20709343  9.9G Linux root (x86-64)
    /dev/vda14   2048     8191     6144    3M BIOS boot
    /dev/vda15   8192   262143   253952  124M EFI System

    Partition table entries are not in disk order.

    Disk /dev/vdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: gpt
    Disk identifier: 6CBB44F1-D559-9B42-A076-7C0EA2B76310

    Device      Start      End  Sectors  Size Type
    /dev/vdb1  262144 20971486 20709343  9.9G Linux root (x86-64)
    /dev/vdb14   2048     8191     6144    3M BIOS boot
    /dev/vdb15   8192   262143   253952  124M EFI System

    Partition table entries are not in disk order.

We need to get the device name. Using the example above, I can see that Desperate’s disk is /dev/vdb and that its root partition (the one we want to mount) is /dev/vdb1. How do I know? Well, a bit of experience I guess. But mainly, in this case, disks are listed as vdX, where the “X” starts at “a” and continues up to “z”. Saviour has a single 10 GiB disk, which is of course the first one (a). The second one (b) has to be Desperate’s disk.
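If you don’t want to rely on the letter order alone, you can double-check by ruling out the disk that holds Saviour’s own root filesystem. Here is a minimal sketch of that idea. The helper name `candidate_disks` is my own invention, and it assumes the root filesystem sits directly on a partition such as /dev/vda1 (not on LVM or similar), so treat it as a starting point rather than a definitive tool.

```shell
# candidate_disks ROOT_PARTITION
# Reads disk device paths from stdin (one per line) and prints every disk
# that does NOT contain ROOT_PARTITION. Hypothetical helper, for illustration.
candidate_disks() {
    root_part="$1"
    while IFS= read -r disk; do
        case "$root_part" in
            "$disk"*) ;;                   # root partition lives on this disk: skip it
            *) printf '%s\n' "$disk" ;;    # any other disk is a candidate
        esac
    done
}

# Typical use on Saviour (run as root):
#   lsblk -dpno NAME | candidate_disks "$(findmnt -n -o SOURCE /)"
```

With the disks from the example above, feeding it /dev/vda and /dev/vdb while the root partition is /dev/vda1 leaves only /dev/vdb.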

Let’s create a temporary mount point /desperate to mount that disk and let’s mount it!

I assume that our disk was formatted as ext4. If not, you can try skipping the -t option and let the mount command guess the filesystem, or pass the right filesystem type.

    root@saviour:~# mkdir /desperate
    root@saviour:~# mount -t ext4 /dev/vdb1 /desperate
    root@saviour:~# ls /desperate/
    bin  boot  dev  etc  home  lib  lib64  lost+found  media  mnt  opt  proc  root  run  sbin  srv  sys  tmp  usr  var

If the ls command worked, it means we’re good to continue!

Restore SSH KEY connectivity

If we were able to connect to Saviour as root using an SSH key, it means that the root user on Saviour is set up properly. So, we can copy the same configuration onto Desperate’s disk to restore SSH key connectivity!

SSH key authentication works by storing the public key in a file called authorized_keys in the .ssh directory of the user you’re connecting as, in this case root.

Let’s simply copy that file onto Desperate’s disk, at the same path!

    root@saviour:~# cp /root/.ssh/authorized_keys /desperate/root/.ssh/authorized_keys
    root@saviour:~#
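The plain cp works as long as /desperate/root/.ssh already exists with sane permissions. If you are not sure about that, here is a slightly more defensive sketch. The function name `install_authorized_keys` and the `.bak` backup suffix are my own choices, not anything standard, and it assumes you run it as root on Saviour so the copied file ends up owned by root.

```shell
# install_authorized_keys SRC_KEYS TARGET_HOME
# Copies SRC_KEYS to TARGET_HOME/.ssh/authorized_keys, creating the .ssh
# directory if it is missing and keeping a backup of any previous file.
# Hypothetical helper, for illustration only.
install_authorized_keys() {
    src="$1"
    ssh_dir="$2/.ssh"
    dest="$ssh_dir/authorized_keys"

    [ -r "$src" ] || { echo "cannot read $src" >&2; return 1; }

    # sshd is picky about permissions: owner-only directory and file.
    mkdir -p "$ssh_dir" || return 1
    chmod 700 "$ssh_dir" || return 1

    # Keep the old file around in case it holds keys that are still wanted.
    if [ -f "$dest" ]; then
        cp -p "$dest" "$dest.bak" || return 1
    fi

    cp "$src" "$dest" || return 1
    chmod 600 "$dest" || return 1

    # cmp -s exits 0 only when both files are byte-for-byte identical.
    cmp -s "$src" "$dest"
}
```

In our scenario you would call it as `install_authorized_keys /root/.ssh/authorized_keys /desperate/root`. Note that it replaces the file rather than appending to it, just like the cp above.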

To be extremely sure, we can verify the copy using md5sum (OPTIONAL), and check that the checksum generated for both files is identical:

    root@saviour:~# md5sum /root/.ssh/authorized_keys
    17c1bba0ef42de1899b650b60dede12b  /root/.ssh/authorized_keys
    root@saviour:~# md5sum /desperate/root/.ssh/authorized_keys
    17c1bba0ef42de1899b650b60dede12b  /desperate/root/.ssh/authorized_keys

If we just want to restore SSH key connectivity, we should be good to go: unmount the disk (umount /desperate), turn off Saviour, move Desperate’s disk back to the Desperate server and, once it is up and running, try to SSH to it using Desperate’s IP/FQDN.

If you also have username and password connectivity to restore, here is an example for the root user only. The same approach works for any other user, but I won’t explain that here: I recommend restoring root and then making all the other changes from the restored server, to avoid misconfigurations.

Restore SSH root password access

A user’s password is stored in /etc/shadow, hashed of course, not in clear text.

If you have forgotten the root password, let’s set a root password on Saviour, and use what we can find in that file to update the one on Desperate.

Using the passwd command as root, we can immediately set/update the password. This time, take note of it! 😉

After setting it, we can get the line where it is stored, using grep for example (or simply by opening the file).

    root@saviour:~# passwd
    New password:
    Retype new password:
    passwd: password updated successfully
    root@saviour:~# grep root /etc/shadow
    root:$y$j9T$usUs5.xlf7HQj90AaeYYN.$SR2YO6yamYA1L59bUa193ndPgiyt1nEgCfkgjXEAxJ9:19986:0:99999:7:::

In this example, we need to replace the line that starts with root in /desperate/etc/shadow with this one:

    root:$y$j9T$usUs5.xlf7HQj90AaeYYN.$SR2YO6yamYA1L59bUa193ndPgiyt1nEgCfkgjXEAxJ9:19986:0:99999:7:::

You can use your favourite editor to do so. Make sure you change ONLY that line and save.
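If you would rather not edit the file by hand, the replacement can be scripted. Below is a rough sketch, not a polished tool: `copy_shadow_entry` is a name I made up, the `.bak` backup suffix is my own convention, and it assumes both files use the usual colon-separated shadow format. It matches on `^root:` so that only the entry whose first field is exactly the user name gets touched.

```shell
# copy_shadow_entry USER SRC_SHADOW DEST_SHADOW
# Replaces USER's line in DEST_SHADOW with USER's line from SRC_SHADOW.
# A backup of DEST_SHADOW is written next to it as DEST_SHADOW.bak.
# Hypothetical helper, for illustration only.
copy_shadow_entry() {
    user="$1"
    src="$2"
    dest="$3"

    # Anchor on "user:" at the start of the line so similar names don't match.
    new_line=$(grep "^${user}:" "$src") || { echo "no $user entry in $src" >&2; return 1; }
    grep -q "^${user}:" "$dest" || { echo "no $user entry in $dest" >&2; return 1; }

    cp -p "$dest" "$dest.bak" || return 1

    work_file=$(mktemp "${dest}.XXXXXX") || return 1

    # Pass the new line through the environment so awk does not interpret
    # any characters in the hash; every other line is printed unchanged.
    NEW_LINE="$new_line" awk -F: -v user="$user" \
        '$1 == user { print ENVIRON["NEW_LINE"]; next } { print }' \
        "$dest" > "$work_file" || { rm -f "$work_file"; return 1; }

    # Write back into the existing file so its owner and mode are preserved.
    cat "$work_file" > "$dest" && rm -f "$work_file"
}
```

For this walkthrough the call would be `copy_shadow_entry root /etc/shadow /desperate/etc/shadow`, run as root on Saviour.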

You can use the same grep command to verify that it matches.

    root@saviour:~# grep root /etc/shadow
    root:$y$j9T$usUs5.xlf7HQj90AaeYYN.$SR2YO6yamYA1L59bUa193ndPgiyt1nEgCfkgjXEAxJ9:19986:0:99999:7:::
    root@saviour:~# grep root /desperate/etc/shadow
    root:$y$j9T$usUs5.xlf7HQj90AaeYYN.$SR2YO6yamYA1L59bUa193ndPgiyt1nEgCfkgjXEAxJ9:19986:0:99999:7:::

At this stage, the root user on Desperate should have the same password that you have set on Saviour.

Unmount the disk (umount /desperate), turn off Saviour, move the disk back to Desperate, turn it on and test! If all works, let’s thank Saviour, and delete it.

I hope this helps and yes… time to think about backup strategies 😉

Happy restoring! 😉