This is a collection of notes extracted from the Udemy course Docker Mastery.

Install docker

$ curl -fsSL https://get.docker.com/ | sh
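Piping the install script straight into sh runs it without any chance to read it first. A minimal sketch of the alternative, assuming you want to review the script before it runs with root privileges: download it to a file, inspect it, then run it. The function name `install_docker` and the default file name `get-docker.sh` are illustrative choices, not part of Docker's tooling.

```shell
# Hypothetical helper: download Docker's convenience script to a file,
# so it can be reviewed before it runs with root privileges.
install_docker() {
  local script="${1:-get-docker.sh}"   # illustrative default file name
  curl -fsSL https://get.docker.com -o "$script" || return 1
  # Review the file here (e.g. less "$script") before going on.
  sudo sh "$script"
}

# Usage:
#   install_docker
```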

- Docker now uses an Ubuntu-like YY.MM versioning scheme

- previously Docker Engine => now Docker CE (Community Edition)

- previously Docker Data Center => now Docker EE (Enterprise Edition) -> includes paid products and support

- 2 release channels:

- Edge: released monthly and supported for one month.

- Stable: released quarterly and supported for 4 months (extended support available via Docker EE)

$ docker version

Client:

Version: 17.05.0-ce

API version: 1.29

Go version: go1.7.5

Git commit: 89658be

Built: Thu May 4 22:10:54 2017

OS/Arch: linux/amd64

Server:

Version: 17.05.0-ce

API version: 1.29 (minimum version 1.12)

Go version: go1.7.5

Git commit: 89658be

Built: Thu May 4 22:10:54 2017

OS/Arch: linux/amd64

Experimental: false

Client -> the CLI installed on your current machine

Server -> the Engine (daemon), always running; it receives commands from the Client through its API
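The Client/Server split is visible in `docker version` itself. A small sketch, assuming you only need one value at a time: `--format` takes a Go template, and `.Client` / `.Server` correspond to the two sections of the output above. The function names are hypothetical.

```shell
# Hypothetical helpers: print just one side of `docker version`.
# --format takes a Go template; .Client and .Server match the two sections above.
client_version() { docker version --format '{{.Client.Version}}'; }
server_version() { docker version --format '{{.Server.Version}}'; }

# Usage:
#   client_version   # version of the CLI on this machine
#   server_version   # version reported by the Engine through its API
```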

New command format:

docker <command> <subcommand> [options]

Let’s play with Containers

Create an Nginx container:

$ docker container run --publish 80:80 --detach nginx

=> publish: map port 80 on the host (local machine) to port 80 of the container

=> detach: run the container in background

=> nginx: this is the image we want to run. Docker will look locally if there is an image cached; if not, it will get the default public ‘nginx’ image from Docker Hub, using nginx:latest (unless you specify a version/tag)

NOTE: every time you do ‘run’, the Docker Engine doesn’t copy the image: it adds an extra (read/write) layer on top of the image, assigns a virtual IP, does the port binding (if requested) and runs whatever is specified under CMD in the Dockerfile.

$ docker container run --publish 80:80 --detach nginx

c984b4231c5bf532efece5ee1a0d553182c4526e792a772d68d2c68204491d4e

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c984b4231c5b nginx "nginx -g 'daemon ..." 12 seconds ago Up 11 seconds 0.0.0.0:80->80/tcp jolly_edison

$ docker container stop c98

c98

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

$ docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c984b4231c5b nginx "nginx -g 'daemon ..." 27 seconds ago Exited (0) 4 seconds ago jolly_edison

bf3de98723a2 nginx "nginx -g 'daemon ..." 2 minutes ago Exited (0) 2 minutes ago angry_agnesi

957a1a710145 nginx "nginx -g 'daemon ..." 5 minutes ago Exited (0) 4 minutes ago infallible_colden

CURIOSITY: if you don’t specify a name, Docker generates a random one in the form <adjective>_<surname>, pairing an adjective with the surname of a famous scientist or hacker (e.g. jolly_edison).

Check what’s happening within a container

$ docker container top <container_name>

$ docker container logs <container_name>

$ docker container inspect <container_name>

$ docker container stats # live view of all running containers' resource usage

$ docker container stats <container_name> # live view of a specific container
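Both forms above keep refreshing until you press CTRL+C. A sketch for when you only want one sample (e.g. in a script), using the `--no-stream` flag of `docker container stats`; the wrapper name `stats_snapshot` is hypothetical.

```shell
# Hypothetical helper: take a single sample of resource usage instead of the
# live, refreshing view. Pass container names to limit the output.
stats_snapshot() {
  docker container stats --no-stream "$@"
}

# Usage:
#   stats_snapshot            # all running containers
#   stats_snapshot webhost    # one container
```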

$ docker container run --publish 80:80 --detach --name webhost nginx

5f8314d5d4e0907025578b696d5d1f5df3633620ee64575bfee5b8441054e168

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f8314d5d4e0 nginx "nginx -g 'daemon ..." 5 seconds ago Up 4 seconds 0.0.0.0:80->80/tcp webhost

$ docker container logs webhost

172.17.0.1 - - [06/Jun/2017:11:15:38 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:53.0) Gecko/20100101 Firefox/53.0" "-"

172.17.0.1 - - [06/Jun/2017:11:15:39 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:53.0) Gecko/20100101 Firefox/53.0" "-"

172.17.0.1 - - [06/Jun/2017:11:15:40 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:53.0) Gecko/20100101 Firefox/53.0" "-"

$ docker container top webhost

UID PID PPID C STIME TTY TIME CMD

root 4467 4449 0 12:15 ? 00:00:00 nginx: master process nginx -g daemon off;

systemd+ 4497 4467 0 12:15 ? 00:00:00 nginx: worker process

$ docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f8314d5d4e0 nginx "nginx -g 'daemon ..." 3 minutes ago Up 3 minutes 0.0.0.0:80->80/tcp webhost

c984b4231c5b nginx "nginx -g 'daemon ..." 6 minutes ago Exited (0) 6 minutes ago jolly_edison

bf3de98723a2 nginx "nginx -g 'daemon ..." 8 minutes ago Exited (0) 8 minutes ago angry_agnesi

957a1a710145 nginx "nginx -g 'daemon ..." 11 minutes ago Exited (0) 10 minutes ago infallible_colden

$ docker container rm 5f8 c98 bf3 957

c98

bf3

957

Error response from daemon: You cannot remove a running container 5f8314d5d4e0907025578b696d5d1f5df3633620ee64575bfee5b8441054e168. Stop the container before attempting removal or force remove

=> Safety measure: you can’t remove a running container unless you use -f to force it.

The process that runs in the container is clearly visible on the main host: simply run ps aux.

In fact, a process running in a container is a process that runs on the host machine, isolated from the rest of the system by kernel namespaces (with its resources controlled by cgroups).
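To see the same process from both sides, a sketch assuming a Linux host (with Docker Desktop on Mac/Windows the containers run inside a VM, so the PID is not visible in the host's ps): read the main process PID from `docker container inspect` (`.State.Pid`), then look it up with the host's ps. The function names are hypothetical.

```shell
# Hypothetical helpers: find the host PID of a container's main process,
# then show that same process with the host's own ps.
container_host_pid() {
  # .State.Pid is the main process PID as seen from the host
  docker container inspect --format '{{.State.Pid}}' "$1"
}

show_container_process() {
  local pid
  pid="$(container_host_pid "$1")" || return 1
  ps -o pid,user,args -p "$pid"
}

# Usage:
#   show_container_process webhost
```

For the webhost container above, the PID printed should match the nginx master process listed by docker top.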

$ docker container run --publish 80:80 --detach --name webhost nginx

6d6700cf4d98745cdb51a2267a0b1a69d33a60ed5a23786316af9f4993af793d

$ docker top webhost

UID PID PPID C STIME TTY TIME CMD

root 5455 5436 0 12:33 ? 00:00:00 nginx: master process nginx -g daemon off;

systemd+ 5487 5455 0 12:33 ? 00:00:00 nginx: worker process

$ ps aux | grep nginx

root 5455 0.0 0.0 32412 5168 ? Ss 12:33 0:00 nginx: master process nginx -g daemon off;

systemd+ 5487 0.0 0.0 32916 2500 ? S 12:33 0:00 nginx: worker process

user 5547 0.0 0.0 14224 968 pts/1 S+ 12:33 0:00 grep --color=auto nginx

Change a container’s default command

$ docker container run -it --name proxy nginx bash

=> -t -> allocate a pseudo-TTY; -i -> interactive (keep STDIN open)

=> ‘bash’ -> the command we want to run once the container starts

When you create this container, you override the default command to run.

This means that the nginx container starts ‘bash’ instead of the default ‘nginx’ command.

Once you exit, the container stops. Why? Because a container runs only as long as its main process runs.

If instead you want to run ‘bash’ as an ADDITIONAL process, use this on an EXISTING, RUNNING container:

$ docker container exec -it <container_name> bash

How to run a CentOS image as a container in the background and attach to it

$ docker container run -d -it --name centos centos:7

$ docker container attach centos

Quick cleanup: force-remove ALL containers, running or stopped [DANGEROUS!]

$ docker rm -f $(docker container ls -a -q)
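The one-liner above also kills running containers. A gentler sketch, assuming you only want to get rid of stopped ones: list exited containers with a status filter, or let `docker container prune` remove all stopped containers. The wrapper names are hypothetical.

```shell
# Hypothetical helpers: gentler alternatives to the force-remove-everything one-liner.
list_exited() {
  # IDs of stopped (exited) containers only; nothing is removed
  docker container ls -a -q --filter status=exited
}

cleanup_stopped() {
  # Removes all stopped containers; asks for confirmation unless -f is passed
  docker container prune "$@"
}

# Usage:
#   list_exited
#   cleanup_stopped -f
```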

Run CentOS container

$ docker container run -it --name centos centos

Unable to find image 'centos:latest' locally

latest: Pulling from library/centos

d5e46245fe40: Pull complete

Digest: sha256:aebf12af704307dfa0079b3babdca8d7e8ff6564696882bcb5d11f1d461f9ee9

Status: Downloaded newer image for centos:latest

[root@8bdc267ea364 /]#

List running containers

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

86004f16905f nginx "nginx -g 'daemon ..." 12 minutes ago Up 12 minutes 80/tcp nginx2

53c2610e1caa nginx "nginx -g 'daemon ..." 14 minutes ago Up 14 minutes 80/tcp nginx

List ALL containers (running and stopped)

$ docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8bdc267ea364 centos "/bin/bash" About a minute ago Exited (127) 6 seconds ago centos

c6edf5df433d nginx "bash" 8 minutes ago Exited (127) 4 minutes ago proxy

86004f16905f nginx "nginx -g 'daemon ..." 12 minutes ago Up 12 minutes 80/tcp nginx2

53c2610e1caa nginx "nginx -g 'daemon ..." 14 minutes ago Up 14 minutes 80/tcp nginx

Start existing container and get prompt

$ docker container start -ai centos

[root@8bdc267ea364 /]#

ALPINE – minimal image (less than 4MB)

$ docker pull alpine

Using default tag: latest

latest: Pulling from library/alpine

2aecc7e1714b: Pull complete

Digest: sha256:0b94d1d1b5eb130dd0253374552445b39470653fb1a1ec2d81490948876e462c

Status: Downloaded newer image for alpine:latest

$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

centos latest 3bee3060bfc8 19 hours ago 193MB

nginx latest 958a7ae9e569 6 days ago 109MB

alpine latest a41a7446062d 11 days ago 3.97MB <<<<<<

httpd latest e0645af13ada 3 weeks ago 177MB

mysql latest e799c7f9ae9c 3 weeks ago 407MB

Alpine has NO bash in it: it comes with just sh.

You can use apk to install packages.
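Putting the two facts together, a sketch that starts a throwaway Alpine container, installs bash with apk, and then switches to it. The helper name is hypothetical; `--rm` means the installed package is gone once you exit, so bake it into a Dockerfile if you need it permanently.

```shell
# Hypothetical helper: start a throwaway Alpine container, install bash with apk,
# then replace sh with an interactive bash shell.
alpine_bash() {
  # --no-cache: fetch the package index on the fly instead of keeping a local copy
  docker container run --rm -it alpine sh -c 'apk add --no-cache bash && exec bash'
}

# Usage:
#   alpine_bash
```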

NOTE: you can only run commands that already exist in the image.

Docker NETWORK

Docker daemon creates a bridged network – using NAT (docker0/bridge).

Each container gets an interface on this network => by default, containers on the same network can communicate with each other without exposing any port with -p. The -p / --publish option is only needed to “connect” a host port to a container port.

You can also create new virtual networks and/or add multiple interfaces to a container, if needed.

Some commands:

$ docker container run -p 80:80 --name web -d nginx

39a1f4db967edb1bbfa2d15f2ad0bf0394c2ae40bb22266ac0c3873db2cbea7d

$ docker container port web

80/tcp -> 0.0.0.0:80

$ docker container inspect --format '{{ .NetworkSettings.IPAddress }}' web

172.17.0.2

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

fb59a42ff104 bridge bridge local

25eda154bf6f host host local

effb256fdda7 none null local

=> Bridge – the network containers get connected to by default

=> Host – allows a container to attach DIRECTLY to the host’s network, bypassing the Bridge network

=> none – removes eth0 from the container, leaving only the loopback (‘localhost’) interface
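A quick way to try the two non-default drivers, as a sketch: with `none`, listing interfaces inside the container should show only the loopback; with `host`, nginx listens directly on the host's port 80 without any -p. This assumes a Linux host (host networking behaves differently with Docker Desktop) and a free port 80; the helper and container names are hypothetical.

```shell
# Hypothetical helpers to try the non-default network drivers.
no_network_demo() {
  # With --network none, `ip addr` should list only the loopback interface (lo)
  docker container run --rm --network none alpine ip addr
}

host_network_demo() {
  # With --network host, nginx binds directly to the host's port 80; no -p needed
  docker container run -d --network host --name "${1:-webhost_hostnet}" nginx
}
```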

$ docker network inspect bridge

[

{

"Name": "bridge",

"Id": "fb59a42ff104945c8e41510f51d8007f97a30734b64f862f342d1739bec721a7",

"Created": "2017-06-06T11:59:26.589409813+01:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"Containers": {

"39a1f4db967edb1bbfa2d15f2ad0bf0394c2ae40bb22266ac0c3873db2cbea7d": {

"Name": "web",

"EndpointID": "723b80d9709e7fb89612d6f16af4223867971a1070db740ff0a4ce4ad497d044",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

$ docker network create my_vnet

b0a0a4e6e529681dd6437a55a5495e928a7cb3af42d3e38298cb36b54c9892e0

=> by default it uses the ‘bridge’ driver

$ docker network inspect my_vnet --format '{{ .Containers }}'

map[9faec11e14697854b51275930817b03eb648baea0e2508195c2bf758d909d503:{nginx2 f889852f5c86bea984b28237f376e8ad2d1aa86335eed307209d25d44dfdba91 02:42:ac:12:00:02 172.18.0.2/16 }]

$ docker network connect my_vnet web

=> adds a new network interface, attached to my_vnet, to the container ‘web’

$ docker container inspect web | less

[...]

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "fb59a42ff104945c8e41510f51d8007f97a30734b64f862f342d1739bec721a7",

"EndpointID": "723b80d9709e7fb89612d6f16af4223867971a1070db740ff0a4ce4ad497d044",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:02"

},

"my_vnet": {

"IPAMConfig": {},

"Links": null,

"Aliases": [

"39a1f4db967e"

],

"NetworkID": "b0a0a4e6e529681dd6437a55a5495e928a7cb3af42d3e38298cb36b54c9892e0",

"EndpointID": "344fd404d81f0e7df86984c3f856d70600eebe8109c6bdcb852577005e5ee5e1",

"Gateway": "172.18.0.1",

"IPAddress": "172.18.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:12:00:03"

}

[...]
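Instead of scrolling through the full inspect output, a Go template can print just the network names and the IP on each. A sketch, using `range` over `.NetworkSettings.Networks` and the template builtin `println`; the helper name is hypothetical. For the ‘web’ container above it should print one line per network (bridge and my_vnet).

```shell
# Hypothetical helper: list each network a container is attached to, with its IP
# on that network, instead of scrolling through the full inspect output.
container_networks() {
  docker container inspect \
    --format '{{range $net, $cfg := .NetworkSettings.Networks}}{{println $net $cfg.IPAddress}}{{end}}' \
    "$1"
}

# Usage:
#   container_networks web
```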

DNS

Because containers are constantly created and destroyed, you cannot rely on their IPs.

Docker uses the containers’ names as hostnames, resolved by its built-in DNS server. This is NOT enabled on the default bridge network, but it is enabled automatically on any network you create.
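A minimal sketch of name resolution on a user-defined network: create a network, start a container on it, then ping that container by name from a second, throwaway container. The network name `dns_demo` and container name `web1` are illustrative.

```shell
# Hypothetical demo (network and container names are illustrative):
# on a user-defined network, containers reach each other by name.
dns_demo() {
  docker network create dns_demo
  docker container run -d --name web1 --network dns_demo nginx:alpine
  # "web1" is resolved by Docker's embedded DNS; on the default bridge this fails
  docker container run --rm --network dns_demo alpine ping -c 1 web1
}

# Clean up afterwards:
#   docker container rm -f web1 && docker network rm dns_demo
```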

Example where we run two Elasticsearch containers on my_vnet, using the alias feature:

$ docker container run -d --network my_vnet --net-alias search --name els1 elasticsearch:2

$ docker container run -d --network my_vnet --net-alias search --name els2 elasticsearch:2

--net-alias <name> => gives several containers the SAME DNS name (round-robin DNS), for example if you want to run a pool of search servers

To test quickly, use this command to hit the “search” DNS name, created automatically:

$ docker container run --rm --net my_vnet centos curl -s search:9200

-> example of running a specific command from a specific image and then removing everything related to the container (quick check). In this case, the CentOS image includes curl by default, so you can run it.

Please note the --rm flag: the container is removed automatically as soon as its main process exits (here, as soon as curl finishes). Very handy to quickly test a container.

Running it multiple times, you should see both Elasticsearch nodes replying.
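To see the round-robin entry itself rather than guessing from the curl replies, a sketch that resolves the alias from inside the network with busybox nslookup (shipped with the alpine image). The helper name is hypothetical; the defaults assume the `search` alias and `my_vnet` network from the example above.

```shell
# Hypothetical helper: show every address behind a network alias.
resolve_alias() {
  # busybox nslookup (in alpine) prints all A records returned for the name
  docker container run --rm --network "${2:-my_vnet}" alpine nslookup "${1:-search}"
}

# Usage:
#   resolve_alias              # "search" on my_vnet
#   resolve_alias search my_vnet
```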

Docker IMAGES

An image is the app binaries + all the required dependencies + metadata

There is NO kernel/drivers (these are shared with the host OS).

Official images have:

- an ‘official’ label in the description

- NO ‘/’ in the name

- extensive documentation

Non-official images generally have the format <organisationID>/<appname>

(e.g. mysql/mysql-server => this is not maintained by Docker but by the MySQL team.)

Images are identified by TAGs.

You can use tags to get the image version that you want.

An image can have multiple tags, so you might end up getting the same image using different tags.

IMAGE Layers

Images are built from layers, using a union file system.

$ docker image history <image>

=> shows the layers the image is made of (one per build step); each layer has a unique SHA.
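Two complementary views of the same layers, as a sketch: `docker image history` lists the build steps and the size each added, while `docker image inspect` with a Go template prints the sha256 digests of the filesystem layers. The helper name is hypothetical.

```shell
# Hypothetical helper: two views of an image's layers.
image_layers() {
  # One row per build step, with the size each step added
  docker image history "$1"
  # sha256 digests of the filesystem layers, as stored in the image config
  docker image inspect --format '{{json .RootFS.Layers}}' "$1"
}

# Usage:
#   image_layers nginx
```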

When you create an image you start with a base layer.

For example, if you pull two images based on Ubuntu 16.04, when you get the second image, you will get just the extra missing layers, as you have already downloaded and cached the basic Ubuntu 16.04 layer (same SHA).

=> you never store the same layer more than once on the filesystem

=> you don’t upload/download a layer that already exists on the other side

It’s like the concept of a VM snapshot.

The image itself is read-only. Whatever you change/add/modify/remove in the container that you run is stored in a read/write layer on top of it.

If you run multiple containers from the same image, Docker creates one extra layer per container, which stores only the differences from the original image (copy-on-write).
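The per-container read/write layer can be inspected directly with `docker container diff`. A sketch, assuming the nginx image: start a container, create a file inside it, then list what differs from the image. The container name `difftest` is illustrative; nginx itself also writes a few files at startup, so expect more entries than just the new file.

```shell
# Hypothetical demo (container name is illustrative): change a file in a running
# container, then list what its read/write layer contains.
rw_layer_demo() {
  docker container run -d --name difftest nginx
  docker container exec difftest touch /tmp/hello
  # A = added, C = changed, D = deleted, relative to the image
  docker container diff difftest
}

# Clean up afterwards:
#   docker container rm -f difftest
```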

# Tag an image from nginx to myusername/nginx

$ docker image tag nginx myusername/nginx

$ docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: myusername

Password:

Login Succeeded

=> stores your login in ~/.docker/config.json

Make sure to run docker logout on untrusted machines, to remove the stored credentials from this file.

# Push the image

$ docker push myusername/nginx

The push refers to a repository [docker.io/myusername/nginx]

a552ca691e49: Mounted from library/nginx

7487bf0353a7: Mounted from library/nginx

8781ec54ba04: Mounted from library/nginx

latest: digest: sha256:41ad9967ea448d7c2b203c699b429abe1ed5af331cd92533900c6d77490e0268 size: 948

# Change tag and re-push

$ docker image tag myusername/nginx myusername/nginx:justtestdontuse

$ docker push myusername/nginx:justtestdontuse

The push refers to a repository [docker.io/myusername/nginx]

a552ca691e49: Layer already exists

7487bf0353a7: Layer already exists

8781ec54ba04: Layer already exists

justtestdontuse: digest: sha256:41ad9967ea448d7c2b203c699b429abe1ed5af331cd92533900c6d77490e0268 size: 948

=> Docker sees that myusername/nginx:justtestdontuse is made of the same layers as myusername/nginx, which are already in the hub, so it doesn’t upload any content (space saving); it just creates a new tag in the repository.

Dockerfile

This file describes how your image should be built. It generally starts from a base image to which you add your customisation. This is also best practice.

FROM -> the base image, used as the initial layer on which the rest is built.

Best practice is to use an official image from Docker Hub, so you can be sure that it is kept up to date (security fixes included).

Each RUN, COPY or ADD instruction adds an extra layer to your image. Chaining commands with && inside a single RUN keeps them in the same layer.

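ENV -> sets environment variables, available at build time and in the running container. Environment variables are the preferred way to inject configuration (keys/values); note that values set with ENV are stored in the image, so pass sensitive information at run time (e.g. with -e) instead of writing it in the Dockerfile.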

RUN -> executes shell commands at build time, generally to install and configure software.

There is generally a RUN that redirects log files to stdout/stderr. This is best practice: no syslog, no log files inside the container.

EXPOSE -> documents which ports the container listens on. It doesn’t publish anything by itself: you still need the --publish (-p) option to actually make a port reachable from the host.
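There is one option that does read EXPOSE: `-P` (capital) publishes every exposed port to a random free port on the host, and `docker container port` tells you which one was picked. A sketch with the nginx image (which exposes port 80); the helper name is hypothetical.

```shell
# Hypothetical helper: publish every EXPOSEd port at once.
run_publish_all() {
  # -P maps each port declared with EXPOSE to a random free port on the host
  docker container run -d -P --name "$1" nginx
  # Shows which host port each container port was mapped to
  docker container port "$1"
}

# Usage:
#   run_publish_all webtest
```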

CMD -> the final command executed when a container starts (generally the main binary)

To build the image from the Dockerfile (in the directory where the Dockerfile exists):

$ docker image build -t myusername/mynginx .

Every time a step changes, that step and all the following ones are rebuilt; the steps before it are reused from the build cache.

This means you should keep the steps that change less frequently at the top and those that change more frequently at the bottom, to make builds faster.
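A sketch of that ordering, reusing the CentOS/Apache setup shown in the examples: the package install (rarely changes) comes before copying index.html (changes often), so editing the page only rebuilds the COPY step and what follows. The helper names and the default tag `myusername/myhttpd` are illustrative.

```shell
# Hypothetical helpers: write a Dockerfile ordered for the build cache, then build it.
write_dockerfile() {
  local dir="$1"
  mkdir -p "$dir"
  cat > "$dir/Dockerfile" <<'EOF'
FROM centos:7
# Changes rarely: stays cached when only index.html is edited
RUN yum -y install httpd && yum clean all
WORKDIR /var/www/html
# Changes often: only this step and the ones after it are rebuilt
COPY index.html index.html
CMD ["/usr/sbin/httpd", "-DFOREGROUND"]
EOF
}

build_image() {
  # Default tag is illustrative
  docker image build -t "${2:-myusername/myhttpd}" "$1"
}

# Usage (index.html must sit next to the Dockerfile):
#   write_dockerfile ./site && cp index.html ./site/ && build_image ./site
```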

# Dockerfile Example

# How to extend/change an existing official image from Docker Hub

FROM nginx:latest

# highly recommend you always pin versions for anything beyond dev/learn

WORKDIR /usr/share/nginx/html

# change working directory to root of nginx webhost

# using WORKDIR is preferred to using 'RUN cd /some/path'

COPY index.html index.html

# replace index.html in /usr/share/nginx/html with the one currently stored

# in the directory where the Dockerfile is present

# There is no need to use CMD because it is already specified in the image

# nginx:latest, in FROM

# This image inherits everything from the upstream image (CMD, EXPOSE, ENV, ...).

Example: CentOS container with Apache and custom index.html file:

# Dockerfile Example

FROM centos:7

RUN yum -y update && \
    yum -y install httpd && \
    yum clean all

EXPOSE 80 443

RUN ln -sf /dev/stdout /var/log/httpd/access_log \
    && ln -sf /dev/stderr /var/log/httpd/error_log

WORKDIR /var/www/html

COPY index.html index.html

CMD ["/usr/sbin/httpd","-DFOREGROUND"]

Example: Using Alpine HTTPD image and run custom index.html file:

# Dockerfile Example

FROM httpd:alpine

WORKDIR /usr/local/apache2/htdocs/

COPY index.html index.html

# Copy all the content of the current directory (the build context) into the WORKDIR directory

COPY . .

A container should be immutable and ephemeral: you should be able to remove/delete/re-deploy it without affecting the data (database, config files, key files, etc.).

Unique data should be somewhere else => Data Volumes and Bind Mounts

Volumes

Volumes need manual deletion -> the data is preserved when the container is removed

In the Dockerfile, the VOLUME instruction specifies that the container will create a new volume location on the host and mount it at the specified path in the container. All the files will be preserved if the container gets removed.

Let’s try using mysql container:

$ docker container run -d --name mysql -e MYSQL_ALLOW_EMPTY_PASSWORD=true mysql

b33d251935b0d22ae56ab569a32dd709ab2102359cf5f8297a3162383f226704

$ docker container inspect mysql

[...]

"Mounts": [

{

"Type": "volume",

"Name": "57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a",

"Source": "/var/lib/docker/volumes/57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a/_data",

"Destination": "/var/lib/mysql",

"Driver": "local",

"Mode": "",

"RW": true,

"Propagation": ""

}

],

[...]

"Volumes": {

"/var/lib/mysql": {}

},

[...]

This image was built with the VOLUME /var/lib/mysql instruction in its Dockerfile.

When the container was created, a new volume was created as well and mounted. Using inspect we can see those details.

$ docker container inspect mysql | less

$ docker volume ls

DRIVER VOLUME NAME

local 57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a

$ docker volume inspect 57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a

[

{

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a/_data",

"Name": "57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a",

"Options": {},

"Scope": "local"

}

]

$

Every time you create a container from such an image, a new (anonymous) volume is created, unless you specify one.

You can create a named volume and share it among multiple containers using the -v <volume_name>:<container_path> option flag.
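A sketch of the full named-volume lifecycle, assuming the mysql setup from this section: the volume can be created explicitly (optional, since -v creates it on first use), survives the container, and has to be removed on its own. The helper names are hypothetical.

```shell
# Hypothetical helpers: named volumes end to end.
run_mysql_on_volume() {
  # Optional: `-v mysql-dbdata:...` would also create the volume on first use
  docker volume create mysql-dbdata
  docker container run -d --name "$1" -e MYSQL_ALLOW_EMPTY_PASSWORD=true \
    -v mysql-dbdata:/var/lib/mysql mysql
}

remove_mysql_and_data() {
  # Removing the container leaves the volume; it must be deleted explicitly
  docker container rm -f "$1"
  docker volume rm mysql-dbdata
}

# Usage:
#   run_mysql_on_volume mysql2
#   remove_mysql_and_data mysql2
```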

$ docker container run -d --name mysql2 -e MYSQL_ALLOW_EMPTY_PASSWORD=true -v mysql-dbdata:/var/lib/mysql mysql

3f36be229a857b0898812c1614903d8bcf7000d8717510300c175bf2968a7829

$ docker container run -d --name mysql3 -e MYSQL_ALLOW_EMPTY_PASSWORD=true -v mysql-dbdata:/var/lib/mysql mysql

5f12cf8f4fd3d7606682474b130d0560197704a79c8ee56af54359ff79fbb555

$ docker volume ls

DRIVER VOLUME NAME

local 57fec8ec83c2cb32d4fbcfbcbacc2a6f84ae978e35d7ac0918aec8f8dbd8565a

local mysql-dbdata

$ docker volume inspect mysql-dbdata

[

{

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/mysql-dbdata/_data",

"Name": "mysql-dbdata",

"Options": {},

"Scope": "local"

}

]

$

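Checking the mysql2 and mysql3 containers, both mount the same mysql-dbdata volume (in practice, avoid running two MySQL servers on the same data directory at the same time: the second one will likely fail to lock the data files):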

$ docker container inspect mysql2

[...]

"Mounts": [

{

"Type": "volume",

"Name": "mysql-dbdata",

"Source": "/var/lib/docker/volumes/mysql-dbdata/_data",

"Destination": "/var/lib/mysql",

"Driver": "local",

"Mode": "z",

"RW": true,

"Propagation": ""

}

],

[...]

"Volumes": {

"/var/lib/mysql": {}

},

[...]

$ docker container inspect mysql3

[...]

"Mounts": [

{

"Type": "volume",

"Name": "mysql-dbdata",

"Source": "/var/lib/docker/volumes/mysql-dbdata/_data",

"Destination": "/var/lib/mysql",

"Driver": "local",

"Mode": "z",

"RW": true,

"Propagation": ""

}

],

[...]

"Volumes": {

"/var/lib/mysql": {}

},

[...]

Bind Mounting

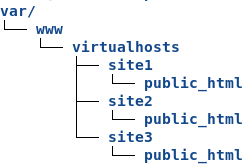

Mount a directory of the host on a specific path in the container.

Same -v flag as for volumes, but the value starts with a host path instead of a name: use the -v <host_path>:<container_path> option flag.

This can be handy for a webserver, for example, that shares the /var/www folder stored locally on the host.
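A sketch of that webserver case with the nginx image: the current directory on the host is mounted over nginx's document root, so editing files locally changes what is served immediately. The host side is given as an absolute path (`$(pwd)`) so that -v treats it as a bind mount rather than a volume name; the helper and default container name are hypothetical.

```shell
# Hypothetical helper: serve the current directory with nginx via a bind mount.
serve_current_dir() {
  # Absolute host path, so -v treats it as a bind mount and not as a volume name
  docker container run -d --name "${1:-webhost}" -p 80:80 \
    -v "$(pwd)":/usr/share/nginx/html nginx
}

# Usage (from the folder containing index.html):
#   serve_current_dir
```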

Docker Compose

- YAML file (replaces the shell script where you would save all your docker run commands) describing:

- containers

- network

- volumes

- CLI docker-compose (locally)
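A minimal sketch of such a YAML file, written from a shell helper so it can be generated and checked: one service using a bind mount and one using a named volume, mirroring the nginx and mysql examples above. The service names, the `./html` folder and the helper name are illustrative; Compose also creates a network for the project, on which the services can reach each other by service name.

```shell
# Hypothetical helper: write a minimal Compose file for a web server plus a database.
write_compose_file() {
  cat > "${1:-docker-compose.yml}" <<'EOF'
version: "3"
services:
  web:
    image: nginx
    ports:
      - "80:80"
    volumes:
      - ./html:/usr/share/nginx/html   # bind mount
  db:
    image: mysql
    environment:
      MYSQL_ALLOW_EMPTY_PASSWORD: "true"
    volumes:
      - mysql-dbdata:/var/lib/mysql    # named volume
volumes:
  mysql-dbdata:
EOF
}

# Usage:
#   write_compose_file && docker-compose up -d
#   docker-compose down
```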

This tool is ideal for local development and testing – not for production.

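By default (without -d), docker-compose up prints the containers’ logs to stdout.

On Linux, you need to install the binary separately. It is available on the Compose GitHub releases page: https://github.com/docker/compose/releases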